That’s really cool, and very smart. Definitely a cut above the neocities one. I think I’ll swap over to use searloc as my default instead. Thanks for the clarification! :)

https://linktr.ee/tomawezome Donations:

- BTC: bc1qu73wa69ey6f4qjhpg0sdtkxhusvtf68946eg6x

- XMR: 4AgRLXVNgMhTWsEjEtZajtULPi6964nuvipGXc6eNyFhWF9CSm7rRpFWQru8hmVzCkS5zBgA2ehhcbk86qLxM9MZ5pTEgYb


So this is basically doing what https://searx.neocities.org/ is doing? Or is it going some step beyond? Neat project

9·2 months ago

Thanks for the “incoherent rant”, I’m setting some stuff up with Anubis and Caddy so hearing your story was very welcome :)

171·2 months ago

It’s just image files, you can remove them or replace the images with something more corporate. The author does state they’d prefer you didn’t change the pictures, but the license doesn’t require adhering to their personal request. I know at least 2 sites I’ve visited previously had Anubis running with a generic checkmark or X that replaced the mascot

1·6 months ago

IRC could work as a backend for text chat; Twitch based their chat system on it and heavily modified things. Jitsi or Jami support video out of the box. Could probably also roll your own with XMPP and WebRTC
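To give an idea of why IRC is easy to build on, here is a minimal sketch of parsing a single chat line in the standard `:nick!user@host PRIVMSG #channel :text` shape. The `ChatMessage` type and `parse_privmsg` name are made up for illustration, and this ignores extras like the tags Twitch adds on top; it's a sketch, not a full IRC client.

```python
from typing import NamedTuple, Optional


class ChatMessage(NamedTuple):
    nick: str
    channel: str
    text: str


def parse_privmsg(line: str) -> Optional[ChatMessage]:
    """Parse ':nick!user@host PRIVMSG #channel :text'; return None for anything else."""
    line = line.rstrip("\r\n")
    if not line.startswith(":"):
        return None  # no sender prefix, e.g. a server PING
    prefix, _, rest = line[1:].partition(" ")
    command, _, params = rest.partition(" ")
    if command != "PRIVMSG":
        return None
    # The message body starts after the first " :" and may itself contain colons.
    target, sep, text = params.partition(" :")
    if not sep:
        return None
    nick = prefix.split("!", 1)[0]
    return ChatMessage(nick, target, text)


if __name__ == "__main__":
    print(parse_privmsg(":alice!alice@example.org PRIVMSG #lobby :hello there\r\n"))
```

A chat backend would sit behind something like this: read lines off the socket, turn the `PRIVMSG` ones into messages, and hand them to whatever UI you build.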

Yes, assuming video content is stored across decentralized PDS instances

I tested it with “cat” and it blocks me from seeing things I’ve reposted with the word “cat” in it, so yeah it might! :)
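Roughly what a mute-word filter does, as a minimal sketch. This is my own guess at the behaviour (case-insensitive, whole-word matching), not Bluesky’s actual matching logic, and `is_muted` / `visible_posts` are made-up names.

```python
import re


def is_muted(post_text, muted_words):
    """Return True if the post contains any muted word as a whole word (case-insensitive)."""
    for word in muted_words:
        pattern = r"\b" + re.escape(word) + r"\b"
        if re.search(pattern, post_text, flags=re.IGNORECASE):
            return True
    return False


def visible_posts(posts, muted_words):
    """Keep only the posts that contain none of the muted words."""
    return [post for post in posts if not is_muted(post, muted_words)]


if __name__ == "__main__":
    feed = ["My cat is asleep", "Concatenate two lists", "New comic page"]
    for post in visible_posts(feed, ["cat"]):
        print(post)
```

Note the filter doesn’t care who posted or reposted something, which would explain why your own reposts disappear too.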

A quick scroll of his account on Bluesky ( https://bsky.app/profile/urlyman.mastodon.social.ap.brid.gy ) makes it pretty clear why his Discover sucks. The algorithm on Bluesky sorta works like a mirror, you get out what you put in. My feed is all art posts and wholesome memes because I follow artists, creators, and comic pages, so it sounds like he’s trained his algorithm to be full of political complaining and toxic people like him. He should probably look into the Mute Words feature and start blocking stuff he thinks is toxic!

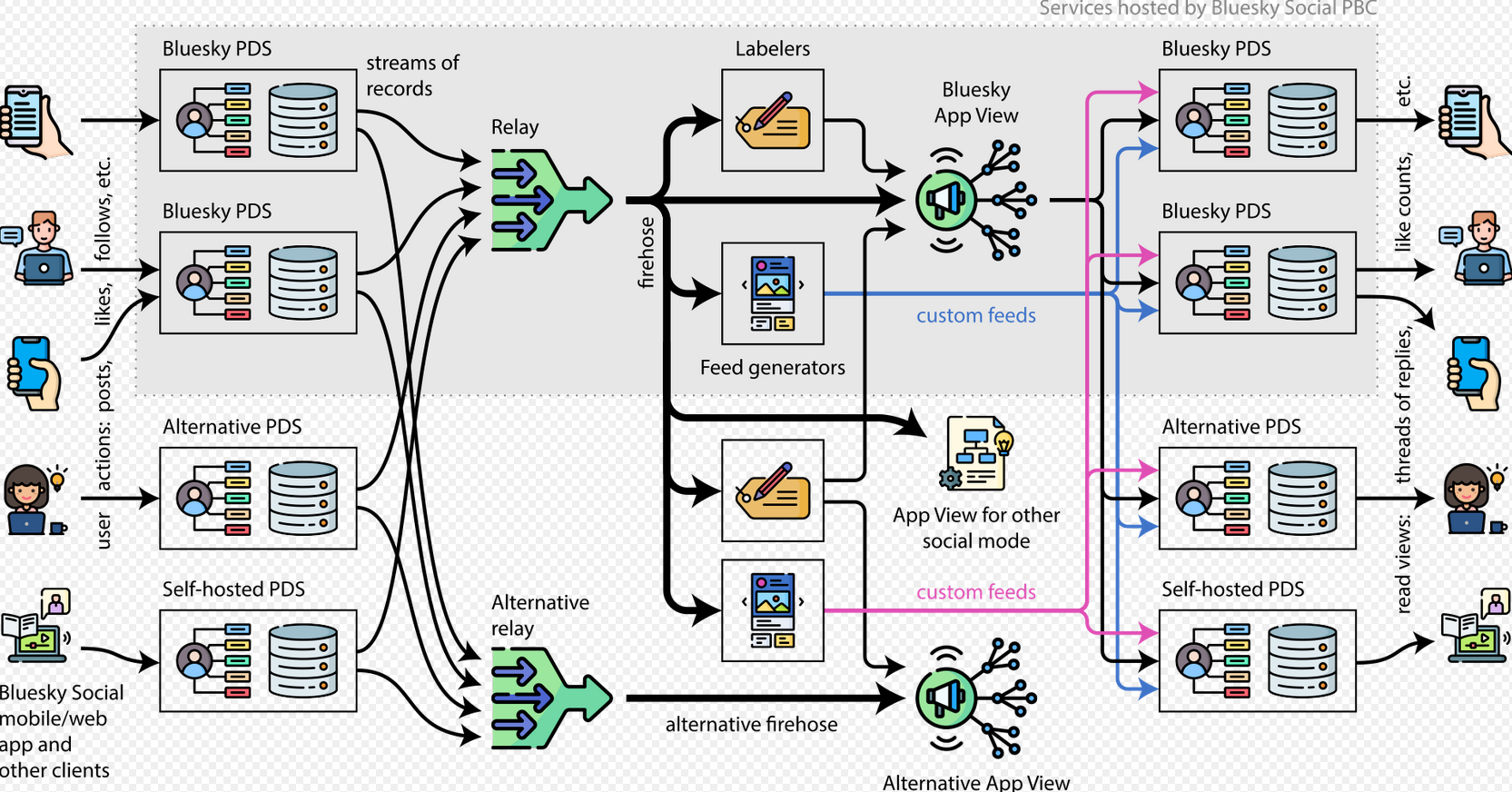

Bluesky is the first app built on the ATProtocol, its protocol for federation, sort of like how Mastodon was among the first to use ActivityPub after the overcomplexity of OStatus. The ATProtocol is a few years younger than ActivityPub, so its landscape isn’t fleshed out yet. Currently Bluesky caters more towards creators, artists, and social togetherness, whereas ActivityPub tends to lean harder into attracting techies. Both protocols can be run as independent instances, but most people are still using bsky.social for now until more instances pop up and federate together. The process for hosting a Bluesky instance is still undergoing development, but all features of it have been opened up. There exist multiple bridge systems that can interweave ActivityPub with ATProto.

https://github.com/bluesky-social/pds

https://whtwnd.com/bnewbold.net/entries/Notes on Running a Full-Network atproto Relay (July 2024)

You can run your own relays, the obstacle is that each relay takes up many terabytes. But it’s fully open.

https://github.com/bluesky-social/pds

https://whtwnd.com/bnewbold.net/entries/Notes on Running a Full-Network atproto Relay (July 2024)

https://news.ycombinator.com/item?id=42094369

https://docs.bsky.app/docs/advanced-guides/firehose

https://docs.bsky.app/docs/advanced-guides/federation-architecture#relay

I run TinyLLM on a separate computer with 64GB RAM and a 6-core/12-thread AMD Ryzen 5 5500GT, using the rocket-3b.Q5_K_M.gguf model, and it runs very quickly. Most of the RAM is used up by other programs I run on it; the LLM doesn’t take the lion’s share. I used to self-host on just my laptop (a 5+ year old ThinkPad with upgraded RAM) and it ran OK with a few models, but after a few months I saved up to build a rig just for that kind of stuff to improve performance. It’s all CPU, no GPU, even though a GPU would be faster, since I was curious whether CPU-only would be usable, which it is. I also use the Llama 2 7B model or the 13B version; the 7B model ran slow on my laptop but runs at a decent speed on the larger rig. The fewer billions of parameters, the goofier they get. Rocket-3b is great for quickly getting an idea of things, not great for copy-pasters. Llama 2 7B or 13B is a little better for handing you almost-exactly-correct answers for things. I think those models are meant for programming, but sometimes I ask them general life questions or vent to them and they receive it well and offer OK advice. I hope this info is helpful :)
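For a rough sense of why the model doesn’t eat much of the 64GB, here is a back-of-the-envelope sketch. The 5.5 bits per weight figure for a Q5_K_M-style quantization is my assumption, the parameter counts are just the nominal 3B/7B/13B from the model names, and it only counts the weights, not context or runtime overhead. `estimate_weight_gib` is a made-up helper.

```python
def estimate_weight_gib(params_billion, bits_per_weight=5.5):
    """Approximate size of quantized weights in GiB (weights only, no runtime overhead).

    bits_per_weight=5.5 is an assumed average for a 5-bit quantization;
    real GGUF files vary.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    for name, size in [("3B", 3), ("7B", 7), ("13B", 13)]:
        print(f"{name}: about {estimate_weight_gib(size):.1f} GiB of weights")
```

Under those assumptions even the 13B model stays in the single digits of GiB, so RAM on a 64GB box isn’t the bottleneck; CPU speed is.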

I’ve used Devuan before with decent success; I run it as a server on an ancient netbook with a 1GHz CPU and 2GB RAM. Works pretty well, but bear in mind that so much has become entangled with the expectations of systemd that as more packages get installed you may find things that break. As an example, `apt` gets an error every time it does anything because the Mullvad VPN software has a configuration step that expects systemd functionality, and obviously that won’t work on Devuan. The program itself works fine, you just have to start it a little differently, but it means that `apt` always returns an error, which itself breaks any other scripts you may run that have steps that use `apt`. I had to do a lot of manual patching of the PiHole scripts to get that installed, because every time it ran anything with `apt` it thought there was a showstopping error simply because Mullvad complained during `apt` configuration.
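To illustrate why one always-failing step breaks everything downstream, here is a minimal sketch of an install script that stops at the first command returning a non-zero exit code. The failing command is a stand-in for the `apt` call (it is not `apt` itself), and `run_pipeline` is a made-up name; real installer scripts differ in the details.

```python
import subprocess
import sys

# Stand-ins: a step that succeeds, and one that exits non-zero
# the way a package-manager call with a failing config hook would.
OK_STEP = [sys.executable, "-c", "pass"]
FAILING_STEP = [sys.executable, "-c", "import sys; sys.exit(1)"]


def run_pipeline(steps):
    """Run steps in order; return how many finished before a failure stopped the run."""
    done = 0
    for cmd in steps:
        try:
            # check=True raises CalledProcessError on any non-zero exit code.
            subprocess.run(cmd, check=True)
        except subprocess.CalledProcessError:
            break
        done += 1
    return done


if __name__ == "__main__":
    completed = run_pipeline([OK_STEP, FAILING_STEP, OK_STEP])
    print(f"completed {completed} of 3 steps")
```

The script can’t tell a harmless complaint from a real failure; it only sees the exit code, so everything after the failing step never runs unless you patch the check out by hand.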